Neuromorphic Computing: How the Brain-Inspired Technology Powers the Next Generation of Artificial Intelligence

Brain-inspired computing for machine intelligence is emerging in the form of neuromorphic chips, more than 30 years after the concept was first developed.

A remarkable product of evolution, the human brain runs on a baseline of about 20 watts, yet it can process complex tasks in milliseconds. Today’s CPUs and GPUs dramatically outperform the human brain at serial processing tasks. However, moving data from memory to a processor and back creates latency and also expends enormous amounts of energy.

Neuromorphic systems attempt to imitate how the human nervous system operates. This field of engineering tries to reproduce the structure of the biological nervous systems responsible for sensing and information processing. In other words, neuromorphic computing implements aspects of biological neural networks as analogue or digital copies in electronic circuits.
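To make the idea of "implementing aspects of biological neural networks" more concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a widely used simplified model of a spiking neuron. It is an illustrative assumption, not a description of how Loihi or any specific chip works; the function name `simulate_lif` and all parameter values are hypothetical choices for the example.

```python
from typing import List


def simulate_lif(
    input_current: List[float],
    tau: float = 20.0,      # membrane time constant in ms (illustrative value)
    dt: float = 1.0,        # simulation time step in ms
    v_rest: float = 0.0,    # resting potential (arbitrary units)
    v_thresh: float = 1.0,  # firing threshold
    v_reset: float = 0.0,   # potential immediately after a spike
) -> List[int]:
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    Hypothetical sketch: Euler integration of dv/dt = (-(v - v_rest) + I) / tau.
    """
    v = v_rest
    spikes: List[int] = []
    for t, current in enumerate(input_current):
        # The potential leaks toward rest while integrating the input current.
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_thresh:
            spikes.append(t)  # emit a discrete spike event
            v = v_reset       # reset the membrane potential
    return spikes


if __name__ == "__main__":
    # A constant drive whose steady state (1.5) exceeds the threshold
    # produces regular spiking; zero input produces no spikes at all.
    print(simulate_lif([1.5] * 100))  # → [21, 43, 65, 87]
    print(simulate_lif([0.0] * 100))  # → []
```

The point of the sketch is that information is carried by the timing of discrete spike events rather than by continuous values moved back and forth between memory and a processor, which is part of why neuromorphic hardware can be energy-efficient.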

Neuromorphics are by no means a new concept. Like many other emerging technologies that are only now gaining momentum, neuromorphics have been quietly under development for a long time. Their time to shine had simply not yet come; more work had to be done.

Most recently, Intel and Sandia National Laboratories signed a three-year agreement to explore the value of neuromorphic computing for scaled-up Artificial Intelligence problems.

According to Intel, Sandia will kick off its research using a 50-million-neuron Loihi-based system that was delivered to its facility in Albuquerque, New Mexico. This initial work with Loihi will lay the foundation for the later phase of the collaboration, which is expected to include continued large-scale neuromorphic research on Intel’s upcoming next-generation neuromorphic architecture and the delivery of Intel’s largest neuromorphic research system to date, which could exceed 1 billion neurons in computational capacity.

When the agreement was announced, Mike Davies, Director of Intel’s Neuromorphic Computing Lab, said: “By applying the high-speed, high-efficiency, and adaptive capabilities of neuromorphic computing architecture, Sandia National Labs will explore the acceleration of high-demand and frequently evolving workloads that are increasingly important for our national security. We look forward to a productive collaboration leading to the next generation of neuromorphic tools, algorithms, and systems that can scale to the billion neuron level and beyond.”

Clearly, expectations for neuromorphic technology are high. While most neuromorphic research to date has focused on the technology’s promise for edge use cases, new developments show that neuromorphic computing could also provide value for large, complex computational problems that require real-time processing, problem solving, adaptation, and, fundamentally, learning.

Intel, a leader in neuromorphic research, is actively exploring this potential by releasing a 100-million-neuron system, Pohoiki Springs, to the Intel Neuromorphic Research Community (INRC). Initial research conducted on Pohoiki Springs demonstrates how neuromorphic computing can provide up to four orders of magnitude better energy efficiency than state-of-the-art CPUs for constraint satisfaction, a standard high-performance computing problem.

One goal of the joint effort is to better understand how emerging technologies such as neuromorphic computing can be used to address some of today’s most pressing scientific and engineering challenges.

These challenges include problems in scientific computing, counterproliferation, counterterrorism, energy, and national security. The possibilities are diverse and perhaps unlimited, extending well beyond the applications one might have expected at the outset.

Advanced research in scaled-up neuromorphic computing is, at this point, paramount to determine where these systems are most effective and how they can provide real-world value. To start, the upcoming research will evaluate how a variety of spiking neural network workloads scale, from physics modeling to graph analytics to large-scale deep networks.

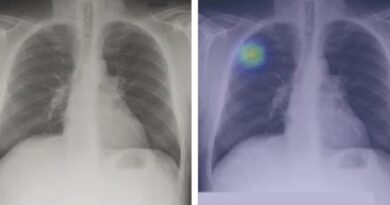

According to Intel, these sorts of problems are useful for performing scientific simulations such as modeling particle interactions in fluids, plasmas, and materials. Moreover, these physics simulations increasingly need to leverage advances in optimization, data science, and machine learning to find the right solutions.

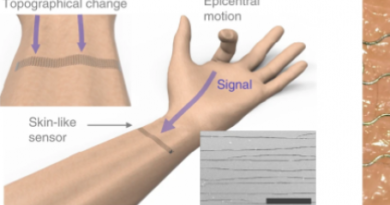

Accordingly, potential applications for these workloads include simulating the behavior of materials, finding patterns and relationships in datasets, and analyzing temporal events from sensor data. This is arguably just the beginning; it remains to be seen which real-life applications will emerge.

Source: https://interestingengineering.com