Muscle signals can pilot a robot

Albert Einstein famously postulated that “the only real valuable thing is intuition,” arguably one of the most important keys to understanding intention and communication.

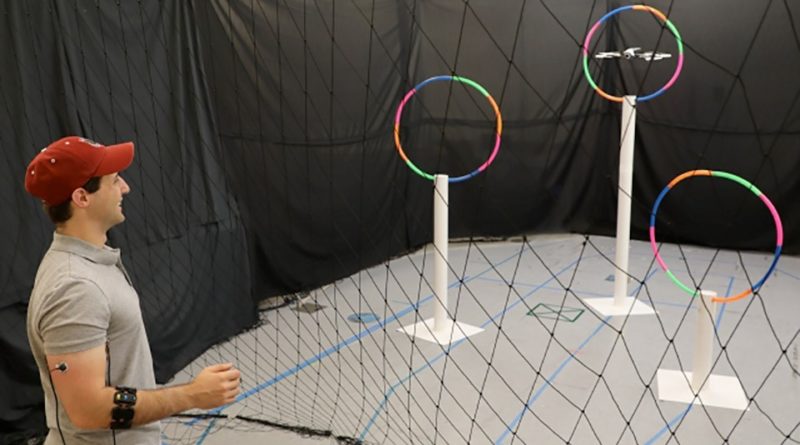

But intuitiveness is hard to teach — especially to a machine. Looking to improve this, a team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) came up with a method that dials us closer to more seamless human-robot collaboration. The system, called “Conduct-A-Bot,” uses human muscle signals from wearable sensors to pilot a robot’s movement.

“We envision a world in which machines help people with cognitive and physical work, and to do so, they adapt to people rather than the other way around,” says Professor Daniela Rus, director of CSAIL, deputy dean of research for the MIT Stephen A. Schwarzman College of Computing, and co-author on a paper about the system.

To enable seamless teamwork between people and machines, electromyography and motion sensors are worn on the biceps, triceps, and forearms to measure muscle signals and movement. Algorithms then process the signals to detect gestures in real time, without any offline calibration or per-user training data. The system uses just two or three wearable sensors and nothing in the environment, which greatly lowers the barrier for casual users interacting with robots.

While Conduct-A-Bot could potentially be used for various scenarios, including navigating menus on electronic devices or supervising autonomous robots, for this research the team used a Parrot Bebop 2 drone, although any commercial drone could be used.

For the gesture vocabulary currently used to control the robot, the movements were detected as follows:

- stiffening the upper arm to stop the robot (similar to briefly cringing when seeing something going wrong): biceps and triceps muscle signals;

- waving the hand left/right and up/down to move the robot sideways or vertically: forearm muscle signals (with the forearm accelerometer indicating hand orientation);

- fist clenching to move the robot forward: forearm muscle signals; and

- rotating clockwise/counterclockwise to turn the robot: forearm gyroscope.
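
The gesture vocabulary above can be read as a simple lookup table. The sketch below is only an illustration of that mapping, not the team's code: the `Gesture` names, the command strings, and the assumption that a wave or rotation moves the robot in the matching direction are all hypothetical.

```python
from enum import Enum


class Gesture(Enum):
    """Hypothetical labels for the gestures listed in the article."""
    ARM_STIFFEN = "arm_stiffen"
    WAVE_LEFT = "wave_left"
    WAVE_RIGHT = "wave_right"
    WAVE_UP = "wave_up"
    WAVE_DOWN = "wave_down"
    FIST_CLENCH = "fist_clench"
    ROTATE_CW = "rotate_clockwise"
    ROTATE_CCW = "rotate_counterclockwise"


# gesture -> (robot command, sensor the article names for detecting it).
# Command strings are made up for this sketch; direction pairing is assumed.
GESTURE_TABLE = {
    Gesture.ARM_STIFFEN: ("stop", "biceps and triceps muscle signals"),
    Gesture.WAVE_LEFT: ("move_left", "forearm muscle signals"),
    Gesture.WAVE_RIGHT: ("move_right", "forearm muscle signals"),
    Gesture.WAVE_UP: ("move_up", "forearm muscle signals"),
    Gesture.WAVE_DOWN: ("move_down", "forearm muscle signals"),
    Gesture.FIST_CLENCH: ("move_forward", "forearm muscle signals"),
    Gesture.ROTATE_CW: ("turn_clockwise", "forearm gyroscope"),
    Gesture.ROTATE_CCW: ("turn_counterclockwise", "forearm gyroscope"),
}


def command_for(gesture: Gesture) -> str:
    """Return the robot command associated with a detected gesture."""
    return GESTURE_TABLE[gesture][0]


def sensor_for(gesture: Gesture) -> str:
    """Return the sensor source the article associates with a gesture."""
    return GESTURE_TABLE[gesture][1]
```

A detector would emit a `Gesture` value, and a separate drone interface would translate the command string into an actual flight instruction.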

Machine learning classifiers detected the gestures using the wearable sensors. Unsupervised classifiers processed the muscle and motion data and clustered it in real time to learn how to separate gestures from other motions. A neural network also predicted wrist flexion or extension from forearm muscle signals.
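
To give a feel for how clustering in real time can stand in for calibration, here is a minimal sketch that separates "resting" from "stiffened" muscle activity on a stream of samples. It uses a streaming two-centroid (k-means-style) update, which is a stand-in chosen for brevity and not necessarily the classifier the team used. The class name, the parameters, the normalized 0-to-1 activation values, and the assumption that the stream starts at rest are all hypothetical.

```python
class OnlineTwoMeans:
    """Streaming 1-D clustering with two centroids: 'low' (rest) and 'high' (active)."""

    def __init__(self, learning_rate=0.05, min_gap=0.3):
        self.learning_rate = learning_rate  # how fast a centroid follows new samples
        self.min_gap = min_gap              # separation needed to seed the active cluster
        self.low = None                     # centroid of resting activity
        self.high = None                    # centroid of active (stiffened) activity

    def update(self, x):
        """Feed one activation sample; return True if it belongs to the active cluster."""
        x = float(x)
        if self.low is None:
            # Assumption: the very first sample is taken at rest.
            self.low = x
            return False
        if self.high is None:
            if x - self.low >= self.min_gap:
                # First clearly separated sample seeds the active cluster.
                self.high = x
                return True
            self.low += self.learning_rate * (x - self.low)
            return False
        # Assign the sample to the nearest centroid and nudge that centroid toward it.
        if abs(x - self.high) < abs(x - self.low):
            self.high += self.learning_rate * (x - self.high)
            return True
        self.low += self.learning_rate * (x - self.low)
        return False


# Made-up activation values: four resting samples, two stiffened, one resting.
stream = [0.10, 0.12, 0.09, 0.11, 0.90, 0.95, 0.10]
detector = OnlineTwoMeans()
labels = [detector.update(value) for value in stream]
```

Because both centroids are learned from the wearer's own incoming data, no per-user threshold has to be set in advance, which is the property the article highlights.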

“This system moves one step closer to letting us work seamlessly with robots so they can become more effective and intelligent tools for everyday tasks,” says DelPreto. “As such collaborations continue to become more accessible and pervasive, the possibilities for synergistic benefit continue to deepen.”

Source: http://news.mit.edu/